Deep Learning for Time-Dependent Behavioural Data – Semester Project

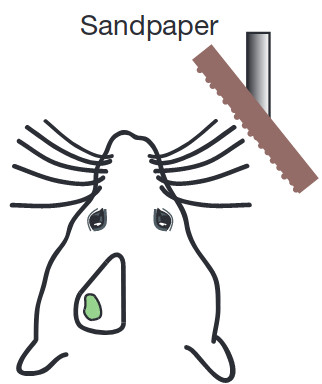

With recent advances in deep learning, it is now possible to substantially automate the tracking of animal behaviour. For example, DeepLabCut (DLC) has been used successfully by many labs to automatically identify selected points in videos of behaving animals after training on just a few hundred human-labeled frames. The main assumption of DLC is that the features of interest can be identified from individual frames, so the algorithm considers only one frame at a time. However, for other tracking-related questions, such as event detection, this assumption is insufficient for good performance. Several of our experiments require precise detection of the first moment the whiskers of a mouse touch a certain object. This task is difficult to solve on a frame-by-frame basis, because occasional false positives can severely corrupt the estimate of the first touch moment: since the first touch is the earliest frame classified as a touch, a single spurious detection before the real contact moves the estimate to that frame. When humans perform the labelling, they often use information from previous frames, such as the velocity of the whisker or the rate of change of its angle, to judge whether a touch has occurred.
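The effect of a single false positive on the first-touch estimate can be illustrated with a small sketch on synthetic per-frame predictions. The function names and the toy data below are hypothetical, and the "persistent" rule (require k consecutive touch frames) is a simple hand-crafted stand-in for temporal context, not the deep learning method the project is meant to develop.

```python
import numpy as np

def first_touch_frame(touch_pred):
    """Return the index of the first frame predicted as touch, or None."""
    idx = np.flatnonzero(touch_pred)
    return int(idx[0]) if idx.size else None

def first_touch_frame_persistent(touch_pred, k):
    """Return the first frame that starts a run of at least k consecutive
    touch predictions, or None. Hand-crafted rule, not a learned model."""
    pred = np.asarray(touch_pred, dtype=int)
    if pred.size < k:
        return None
    # window_sums[i] = number of touch frames among pred[i : i + k]
    window_sums = np.convolve(pred, np.ones(k, dtype=int), mode="valid")
    idx = np.flatnonzero(window_sums == k)
    return int(idx[0]) if idx.size else None

# Hypothetical ground truth: contact from frame 60 to frame 79.
true_touch = np.zeros(100, dtype=bool)
true_touch[60:80] = True

# A frame-wise classifier that is correct except for one spurious
# positive at frame 12.
pred = true_touch.copy()
pred[12] = True

print(first_touch_frame(pred))                # → 12
print(first_touch_frame_persistent(pred, 3))  # → 60
```

The persistence rule removes the isolated error here, but it would miss genuine touches shorter than k frames and cannot use cues such as whisker velocity; learning such temporal evidence from several frames at once is the motivation for the project.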

The student will design a deep learning pipeline that considers multiple frames simultaneously to determine the timing of the first touch event. The student will then apply the method to our labeled and unlabeled data, validating and optimizing the pipeline. The exact implementation details of the pipeline are left to the student's creative work.
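One possible starting point, among several, is to let a network see several frames at once by stacking consecutive frames along a channel axis; 3D convolutions or recurrent layers over per-frame features are equally valid alternatives. The helper below is a hypothetical sketch of the input preparation only, assuming grayscale video stored as a NumPy array of shape (T, H, W) and NumPy 1.20 or newer (for `sliding_window_view`).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def make_frame_windows(video, window):
    """Stack each frame with its (window - 1) predecessors.

    video: array of shape (T, H, W), one grayscale frame per time step.
    Returns an array of shape (T - window + 1, window, H, W); entry i
    holds frames i .. i + window - 1.
    """
    # sliding_window_view appends the window axis last:
    # shape (T - window + 1, H, W, window)
    windows = sliding_window_view(video, window_shape=window, axis=0)
    # Move the window axis next to the sample axis so it can act as channels
    return np.moveaxis(windows, -1, 1)

# Hypothetical toy video: 10 frames of 4x5 pixels
video = np.arange(10 * 4 * 5, dtype=np.float32).reshape(10, 4, 5)
x = make_frame_windows(video, window=3)
print(x.shape)  # → (8, 3, 4, 5)
```

Each stack could be paired with the touch label of its last frame, so that the model, like a human labeller, relies only on the current and previous frames. The result is a read-only view; copying it (for example with `np.ascontiguousarray`) may be needed before passing it to a training framework.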

Research Group

Laboratory of Neural Circuit Dynamics (Prof. Fritjof Helmchen) at the Brain Research Institute, University of Zurich (Irchel Campus, building 55).

Requirements

Experience with Python and deep learning

Contact

Philipp Bethge (bethge@hifo.uzh.ch)