Semester Project: Development of a Web Application for Evaluating and Actively Adapting Personalized ASR Models

Motivation

Automatic speech recognition (ASR) plays a central role in enabling human-computer interaction. Yet children's speech, especially when affected by disabilities, is highly variable and changes over time, which poses challenges for conventional models trained on normative adult speech. In our research group, we have developed an approach to personalize a base ASR model for children with speech impairments when only limited data is available. Because these children's speech keeps changing, a personalized model must also keep adapting, which calls for active learning. To test an active learning approach that continuously refines the model based on user feedback, and as a proof of concept for real-world use, we aim to develop a web application. It will allow users to test their personalized model, upload speech samples after poor transcription results, and trigger further fine-tuning.
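One way to picture the feedback loop described above is the minimal sketch below. It assumes that users submit a corrected transcript with each uploaded sample and that a transcription counts as "poor" when its word error rate (WER) reaches a threshold. `FeedbackSample`, `ActiveLearningQueue`, the 0.3 threshold and the batch size of 20 are hypothetical choices for illustration, not part of the group's existing approach, and the fine-tuning step itself is left out.

```python
from dataclasses import dataclass, field
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev holds the edit distances for the previous reference prefix
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[-1] / len(ref)


@dataclass
class FeedbackSample:
    """Hypothetical record of one user upload."""
    audio_path: str
    hypothesis: str  # transcript produced by the personalized model
    reference: str   # transcript corrected by the user


@dataclass
class ActiveLearningQueue:
    """Hypothetical per-user buffer of poorly transcribed samples."""
    wer_threshold: float = 0.3  # assumed cutoff for a "poor" transcription
    batch_size: int = 20        # assumed number of samples per fine-tuning round
    pending: List[FeedbackSample] = field(default_factory=list)

    def add(self, sample: FeedbackSample) -> bool:
        """Keep the sample if it was transcribed poorly.

        Returns True once enough samples are pending for a fine-tuning round.
        """
        if word_error_rate(sample.reference, sample.hypothesis) >= self.wer_threshold:
            self.pending.append(sample)
        return len(self.pending) >= self.batch_size

    def take_batch(self) -> List[FeedbackSample]:
        """Remove and return the oldest batch_size pending samples."""
        batch, self.pending = self.pending[: self.batch_size], self.pending[self.batch_size:]
        return batch
```

In this sketch, the backend would call `add` for every piece of user feedback and hand the result of `take_batch` to the retraining job whenever `add` returns True. The same WER function could also serve the evaluation side of the application.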

The Project

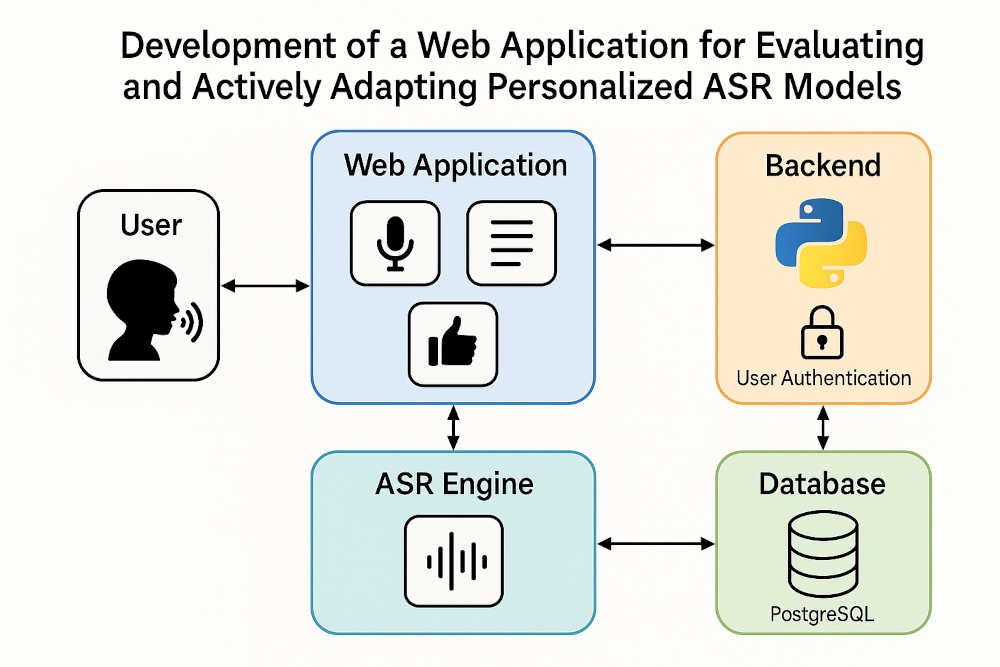

The goal of this project is to design and implement a full-stack web application that enables users to interact with personalized ASR models. The application will feature a React-based frontend for recording or uploading audio, displaying ASR outputs, and collecting user feedback. A Python backend (using Flask or a similar framework) will handle user authentication, data routing, and communication with the ASR engine. The system will integrate with a PostgreSQL database to manage user accounts, uploaded data, and model metadata. It will have a modular structure that allows multiple users to access and adapt their own ASR models, including active learning capabilities for model retraining. The final application will be deployed on a secure, Linux-based virtual machine.

Required Skills

- Experience with web development (HTML, CSS, JavaScript/TypeScript, React)

- Basic knowledge of Python backend development (preferably Flask or FastAPI)

- Familiarity with databases (PostgreSQL, SQLAlchemy or an equivalent ORM)

- Interest in speech technology or machine learning (no deep ML knowledge required)

- Ability to work independently and in collaboration with an interdisciplinary team

Optional/Plus

- Docker or VM deployment experience

- Experience with REST APIs or handling audio data in the browser

- Research experience in human-computer interaction (HCI) is highly desirable.

- This project could potentially lead to a publication in the ACL/EMNLP System Demonstrations track or at top HCI conferences such as ACM CHI or ASSETS.

Contact

If you are interested or would like to know more about this semester project, please contact Roman or one of the other supervisors:

Roman Boehringer (Grewe Group): roman@ini.ethz.ch

Yingqiang Gao (Institute for Computational Linguistics): yingqiang.gao@uzh.ch

Pehuen Moure (Sensors Group): pehuen@ini.ethz.ch