Brain-inspired online training algorithms for neuromorphic hardware

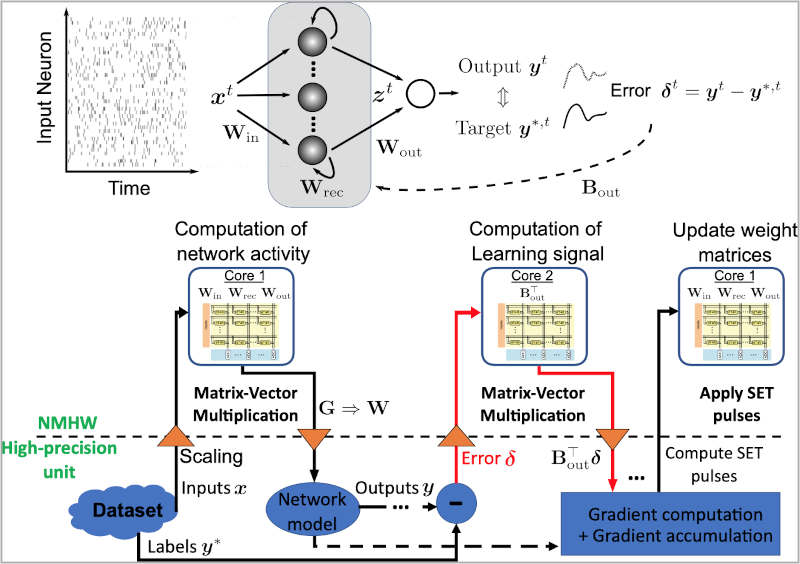

Illustration of the in-memory computing neuromorphic hardware setup.

Artificial Neural Networks (ANNs) are at the heart of today’s intelligent systems, which have demonstrated state-of-the-art and, in some cases, super-human performance in several challenging domains. These networks were initially inspired by the operating principles of the human brain but have remained only remotely connected to recent neuroscientific findings. The inability to train ANNs online from incoming sensory data streams in low-power settings has limited the widespread applicability of battery-powered smart devices at the edge. Spiking Neural Networks (SNNs), on the other hand, are better suited to low-power processing tasks because of their inherent temporal dynamics and sparse communication. With recent developments in the online training of SNNs and in hardware accelerators equipped with resistive memory technologies, a promising new path to realizing low-power, brain-inspired cognitive systems has emerged.

The aim of this project is to combine insights from neuroscience with advances in hardware accelerators to form a powerful system capable of solving challenging real-world tasks. The ultimate goal is to build a proof-of-concept system that demonstrates online learning on in-memory neuromorphic hardware. To do so, we will take advantage of Phase Change Memory (PCM) devices, one of the most mature resistive memory technologies, and use biologically plausible neural networks together with online learning algorithms such as e-prop [1] or OSTL [3]. Building on our recent work [2], we will investigate methods to increase the energy efficiency as well as the performance of the employed SNNs and learning algorithms. We will also realize the network architecture with PCM devices using an in-memory computing neuromorphic hardware setup [4] developed at IBM Research, Zurich. This requires investigating the performance of the developed algorithms under hardware constraints. To demonstrate the performance of the developed networks and learning algorithms, common regression or classification tasks will be used, with a focus on online adaptation.
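To give a flavour of what "online" means for an algorithm such as e-prop [1], the following is a minimal NumPy sketch of one time step for a feed-forward layer of leaky integrate-and-fire neurons. It is a simplified illustration, not the project's implementation: the function name, the parameter values, and the omission of recurrent connections and of the additional low-pass filtering of the eligibility trace are all assumptions made for brevity. The learning signal is passed in as an argument; in e-prop it would be derived from the output error.

```python
import numpy as np

def eprop_online_step(w, v, z, x_bar, x_t, learning_signal,
                      alpha=0.9, v_th=1.0, gamma=0.3, lr=1e-2):
    """One simplified e-prop-style step for a feed-forward LIF layer.

    w: (n_hid, n_in) weights, v: (n_hid,) membrane potentials,
    z: (n_hid,) spikes from the previous step, x_bar: (n_in,) filtered inputs,
    x_t: (n_in,) input spikes, learning_signal: (n_hid,) per-neuron error signal.
    All names and default values are illustrative assumptions.
    """
    # Leaky integration with reset by subtraction after a spike.
    v = alpha * v + w @ x_t - z * v_th
    z = (v >= v_th).astype(float)

    # Low-pass filtered presynaptic activity, local to each input line.
    x_bar = alpha * x_bar + x_t

    # Pseudo-derivative of the spike function (triangular surrogate).
    psi = (gamma / v_th) * np.maximum(0.0, 1.0 - np.abs((v - v_th) / v_th))

    # Eligibility trace: postsynaptic factor times presynaptic trace.
    elig = np.outer(psi, x_bar)

    # Weight update uses only quantities available at the current step.
    w = w - lr * learning_signal[:, None] * elig
    return w, v, z, x_bar

# Hypothetical usage with 3 inputs and 2 neurons.
w = np.full((2, 3), 0.5)
v, z, x_bar = np.zeros(2), np.zeros(2), np.zeros(3)
x_t = np.array([1.0, 0.0, 0.0])
w, v, z, x_bar = eprop_online_step(w, v, z, x_bar, x_t,
                                   learning_signal=np.array([1.0, -1.0]))
```

Because the update needs no stored history of past activations, it can in principle be applied as each input sample arrives, which is the property that makes such rules attractive for in-memory hardware.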

This Master’s thesis project will be conducted at the IBM Research Zürich premises in Rüschlikon, Zürich.

Supervisors: Prof. Giacomo Indiveri (INI) and Dr. Angeliki Pantazi (IBM Research)

Co-supervisors: Thomas Bohnstingl (IBM Research), Yigit Demirag (INI) and Melika Payvand (INI)

Requirements

• Strong programming skills in Python.

• Experience with the TensorFlow machine learning framework.

• Analytical and in-depth problem-solving skills.

• Excellent communication and team skills.

Tasks involved in this project

• Study of online training algorithms (e-prop and OSTL) for SNNs

• Develop algorithmic methods to increase the efficiency of online learning on the analog hardware (e.g., reducing the need for a digital full-precision co-processor)

• Improve the performance of the training algorithms through advanced feedback signals

• Investigate the impact of analog non-idealities (limited bit-precision, noise and device non-linearities)

• Develop a proof-of-concept neuromorphic system with a computer in the loop
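The analog non-idealities named in the task list can be explored in software before moving to hardware. The sketch below is a minimal NumPy illustration under stated assumptions: the helper names, the uniform quantization scheme, the Gaussian read-noise model, and the soft-bound update rule are generic placeholders, not a calibrated model of PCM devices or of the hardware setup in [4].

```python
import numpy as np

def quantize(w, n_bits=4, w_max=1.0):
    """Limited bit-precision: snap weights to 2**n_bits uniform levels in [-w_max, w_max]."""
    step = 2.0 * w_max / (2 ** n_bits - 1)
    w_clipped = np.clip(w, -w_max, w_max)
    return np.round((w_clipped + w_max) / step) * step - w_max

def noisy_read(w, sigma=0.02, rng=None):
    """Read noise: additive Gaussian perturbation (assumed noise model)."""
    rng = np.random.default_rng() if rng is None else rng
    return w + rng.normal(0.0, sigma, size=np.shape(w))

def nonlinear_update(w, dw, w_max=1.0):
    """Device non-linearity: updates shrink as the weight nears the bound it moves toward."""
    headroom = np.where(dw > 0, w_max - w, w_max + w) / (2.0 * w_max)
    return np.clip(w + dw * headroom, -w_max, w_max)

# Hypothetical usage: apply a requested update, then store and read the weights.
rng = np.random.default_rng(0)
w = rng.uniform(-1.0, 1.0, size=(4, 4))
dw = 0.1 * rng.standard_normal((4, 4))
w_stored = quantize(nonlinear_update(w, dw), n_bits=4)
w_read = noisy_read(w_stored, sigma=0.02, rng=rng)
```

Wrapping weight reads and updates in functions like these makes it possible to compare a learning algorithm with and without each non-ideality enabled.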

References

[1] Guillaume Bellec, Franz Scherr, Anand Subramoney, Elias Hajek, Darjan Salaj, Robert Legenstein, and Wolfgang Maass. A solution to the learning dilemma for recurrent networks of spiking neurons. Nat. Commun., 11(3625):1–15, July 2020.

[2] Thomas Bohnstingl, Anja Surina, Maxime Fabre, Yigit Demirag, Charlotte Frenkel, Melika Payvand, Giacomo Indiveri, and Angeliki Pantazi. Biologically-inspired training of spiking recurrent neural networks with neuromorphic hardware. AICAS, May 2022.

[3] Thomas Bohnstingl, Stanisław Woźniak, Angeliki Pantazi, and Evangelos Eleftheriou. Online Spatio Temporal Learning in Deep Neural Networks. IEEE Trans. Neural Networks Learn. Syst., pages 1–15, March 2022.

[4] R. Khaddam-Aljameh, M. Stanisavljevic, J. Fornt Mas, G. Karunaratne, M. Braendli, F. Liu, A. Singh, S. M. Muller, U. Egger, A. Petropoulos, T. Antonakopoulos, K. Brew, S. Choi, I. Ok, F. L. Lie, N. Saulnier, V. Chan, I. Ahsan, V. Narayanan, S. R. Nandakumar, M. Le Gallo, P. A. Francese, A. Sebastian, and E. Eleftheriou. HERMES Core – A 14nm CMOS and PCM-based In-Memory Compute Core using an array of 300ps/LSB Linearized CCO-based ADCs and local digital processing. In 2021 Symposium on VLSI Technology, pages 1–2. IEEE, June 2021.

Contact

Please contact Melika Payvand and Yigit Demirag at {melika, yigit}@ini.uzh.ch.