High-speed tracking of head pose and facial expression using dynamic vision sensor event cameras

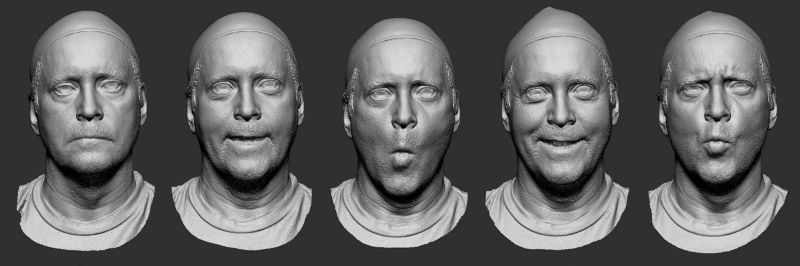

We (the Grewe and Delbrück groups) are looking for a Master's student who is interested in carrying out a collaborative technology development project at the Institute of Neuroinformatics (INI). The goal of this project is to develop a fast machine learning system (hardware and software) that detects head pose (head direction) and a predefined set of human facial expressions from video data within milliseconds. This information can then be used, for example, to control a robotic arm, steer a wheelchair, or play the Cybathlon game.

The system will consist of a dynamic and active pixel vision sensor (DAVIS) that records changes in head pose and facial expression (portrait arrangement) with millisecond precision. The dynamic vision sensor (DVS) output will then be forwarded to a deep network running on specialized hardware accelerators that perform inference within milliseconds. The project requires recording a new DVS training dataset to train the deep network to detect a predefined set of facial expressions and head poses (e.g. lips moving up, left, down or right).

The advantage of this combined hardware/software, imaging/machine-learning approach is a faster response, and thus more dynamic control of the actuator, as well as operation under lower and more uneven lighting. High-speed pattern recognition on complex visual data is not possible with standard frame-based CMOS cameras, which typically record at video rates (30-60 Hz). One possible application is to allow people with physical disabilities to rapidly control a robotic actuator or drive a robotic car or wheelchair by changing their facial expression.

Responsibilities:

• Find suitable facial expressions that allow fast and easy readout.

• Generate a facial expression training dataset for the DAVIS (images and labels).

• Train a deep net classifier to distinguish facial expressions based on the DVS data.

• Interface the DVS with an FPGA board for fast classification.

• Interface the output of the FPGA classification device with the Cybathlon controller.

What we offer:

• The interdisciplinary and collaborative environment at the intersection of engineering, neuroscience, and machine learning

• A highly motivated research team and cutting-edge research project

• The potential for continuing work at the INI

Start of Project: Immediately

Length of Project: 6-9 months

Contact: bgrewe@ethz.ch and tobi@ini.uzh.ch

Paid position:

Interested students with an EE background and experience in machine learning as well as FPGA/GUI programming are especially encouraged to contact us. Please attach a CV and a short statement of your motivation and background (less than half a page). If you have any questions about the project, do not hesitate to contact us.